Agile Testing, the ABCs…

QA Testing Process Goals:

- Expose inconsistencies

- Remove errors and redundancies

- Test in a real-life environment

- Ensure project survival & longevity

Agile teams can’t successfully deliver value frequently, at a sustainable pace, without a great deal of automation. Teams who want to practice continuous delivery need automation to maintain the right level of quality.

from: https://agiletester.ca/whats-books-whats-not/

I quite agree with this testing manifesto:

Testing throughout OVER testing at the end

Preventing bugs OVER Finding bugs

Testing Understanding OVER checking functionality

Building the best system OVER breaking the system

Team responsibility for quality OVER Tester responsibility

Goal of Quality and Testing

Quality: deliver the best value and experience for the user

Testing: Defect Prevention! (not detection)

Cost of fixing a defect, by phase:

Key take-aways

- Traditional defect detection is an expensive way to ensure quality. Defect prevention is the ultimate goal. Just think: if only we could have had today’s forward-looking radar on the bow of the Titanic.

- Start as early as you can with Software Craftsmanship and adoption of Test Driven Development. Agile organizations are continually working toward bulletproof quality, full stack test automation, CI & CD, and the sooner you can get started the better.

- The goal is not just to “do Agile” but to “be agile”, and escaped defects can be used as a barometer for progress towards that agility. As their number goes down, agility goes up.
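To make the escaped-defects barometer trackable, it helps to turn it into a number per release. The sketch below is my own assumption, not a formula taken from the referenced article: it divides the defects found after release by all defects found for that release. The function name and the sample counts are hypothetical.

```python
def escaped_defect_rate(found_before_release: int, found_after_release: int) -> float:
    """Share of a release's known defects that escaped to production (0.0 to 1.0)."""
    if found_before_release < 0 or found_after_release < 0:
        raise ValueError("defect counts cannot be negative")
    total = found_before_release + found_after_release
    if total == 0:
        return 0.0  # no defects recorded at all, so nothing escaped
    return found_after_release / total


# Hypothetical counts for three consecutive releases: (caught internally, escaped)
releases = [(30, 10), (36, 4), (38, 2)]
rates = [escaped_defect_rate(before, after) for before, after in releases]
print([f"{rate:.0%}" for rate in rates])
```

A falling rate from release to release is the trend to look for; the absolute value matters less than its direction.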

Phases from specification to production

- Development: Story Specification —> Acceptance Test Driven Development (ATDD, TDD, BDD)

- Quality Verification: End-to-end verified —> Automated & Exploratory

- Business Verification: Business demo —> Product Owner verified

Agile testing cycle from: https://usersnap.com/blog/agile-testing/

Planning Essentials in testing of web applications

1. REQUIREMENT ANALYSIS PHASE

excerpt from: https://agiletester.ca/test-planning-cheat-sheet/

1. Value to users/business

- What’s the purpose of the story?

- What problem will it solve for the user, for us as a business?

- How will we know the feature is successful once it is released? What can we measure, in what timeframe? Do we need any new analytics to get usage metrics in production?

2. Feature behaviour

- What are the business rules?

- Get at least one happy path example for each rule, and ideally also one misbehavior example

- Who will use this feature? What persona, what particular job is a person doing?

- What will users do before using this feature? Afterward?

- What’s the worst thing that could happen when someone uses it? (exposes risks)

- What is the best thing that can happen? (delighting the customer)

- Is the story / feature / epic too big? Can we deliver a thin slice and get feedback?

- Watch out for scope creep and gold-plating!

3. Quality Attributes

- Could this affect performance? How will we test for that?

- Could this introduce security vulnerabilities? How will we test for that?

- Could the story introduce any accessibility issues?

- What quality attributes are important for this feature, for the context?

4. Risks

- Are there new API endpoints or server commands? Will they follow current patterns?

- Do we have all the expertise for this on our team? Should we get help from outside?

- Is this behind a feature flag? Will automated tests run with the feature flag on as well as off?

- Are mobile/web at risk of being affected?

- Are there impacts to other parts of the system?

5. Testability

- How will we test this?

- What automated tests will it have – unit, integration, smoke?

- Do we need a lot of exploratory testing?

- Should we write a high level exploratory testing charter for where to focus testing for this feature/epic?

- Do we have the right data to test this?

- Does it require updating existing tests, or adding new ones? If new ones, is there any learning curve for a new technology?
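The feature-flag question under Risks ("Will automated tests run with the feature flag on as well as off?") can be answered with one test that loops over both states. This is only a minimal sketch using the standard `unittest` module; the `checkout_total` function, the `new_discount` flag, and the 10% discount are hypothetical stand-ins for a real feature.

```python
import unittest


def checkout_total(subtotal: float, flags: dict) -> float:
    """Hypothetical feature: a 10% discount guarded by the 'new_discount' flag."""
    if flags.get("new_discount", False):
        return round(subtotal * 0.9, 2)
    return subtotal


class CheckoutFlagTest(unittest.TestCase):
    def test_total_with_flag_on_and_off(self):
        # Each flag state runs as its own sub-test, so a failure names the state.
        cases = [(True, 90.0), (False, 100.0)]
        for flag_on, expected in cases:
            with self.subTest(new_discount=flag_on):
                total = checkout_total(100.0, {"new_discount": flag_on})
                self.assertEqual(total, expected)
```

Saved in a test module, this runs with `python -m unittest`. Keeping both states in the same test makes it harder to forget the "off" path once the flag is switched on by default.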

User Stories should include:

- The “User Story”

- The Who, What, Why of the feature request: As a [user type], I want [some goal] so that [some reason].

- Business rules/Requirements:

- Review for ambiguous, incomplete, misinterpreted, …

- Acceptance Criteria:

- see: https://www.leadingagile.com/2014/09/acceptance-criteria/

- Criteria should state intent, but not a solution. (e.g., “User can approve or reject an invoice” rather than “User can click a checkbox to approve an invoice”). The criteria should be independent of the implementation, and discuss WHAT to expect, and not HOW to implement the functionality.

- User Scenarios: Given/When/Then

- Given some precondition When I do some action Then I expect some result

- Identifying real user scenarios

- Used as a guide for end-to-end testing, and also helps uncover usability issues alongside functional ones

- Consistency between features and functionality across the app
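A Given/When/Then scenario maps almost one-to-one onto an automated test. Here is a small sketch built on the invoice example from the acceptance criteria above; the `Invoice` class and its methods are hypothetical and exist only for illustration. Note that the tests check the intent ("user can approve or reject an invoice") and say nothing about checkboxes or buttons.

```python
from dataclasses import dataclass


@dataclass
class Invoice:
    """Hypothetical domain object used only for this illustration."""
    amount: float
    status: str = "pending"

    def approve(self) -> None:
        self._decide("approved")

    def reject(self) -> None:
        self._decide("rejected")

    def _decide(self, new_status: str) -> None:
        # Only a pending invoice can be decided on.
        if self.status != "pending":
            raise ValueError(f"invoice is already {self.status}")
        self.status = new_status


def test_user_can_approve_a_pending_invoice():
    # Given a pending invoice
    invoice = Invoice(amount=250.0)
    # When the user approves it
    invoice.approve()
    # Then the invoice is approved
    assert invoice.status == "approved"


def test_user_cannot_approve_a_rejected_invoice():
    # Given an invoice that was already rejected
    invoice = Invoice(amount=250.0)
    invoice.reject()
    # When the user tries to approve it, Then the attempt is refused
    try:
        invoice.approve()
    except ValueError:
        pass
    else:
        raise AssertionError("approving a rejected invoice should fail")
```

The first test is the happy path and the second is a misbehavior example, matching the "at least one of each per business rule" advice from the planning cheat sheet.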

2. TEST PLANNING PHASE

Questions that should be asked by the team when defining business rules:

- Scalability

  - response time

  - screen transitions

  - throughput

  - requests per second

  - network usage

  - memory usage

  - the time it takes to execute tasks, among others

- Performance (load balancing, page loading time, spikes in traffic, …)

- Devices and platforms supported (browser and responsive issues, screen sizes, …)

- Common user conditions (similar popular features in the market, competitive edge,…)

- User forms and input issues (ex. search queries, login, …)

- Security checks (user roles, permissions, …)

- Collect web and mobile traffic data (metrics, how the delivered feature will be measured, success rate, …)

- Monitoring (fail-safe checks, …)

- Visual checks (“don’t make me think” testing: is the design intuitive and legible, does scrolling work, …)

- popups

- Previous technical debt or known bugs to fix
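Several of the scalability items above (response time, throughput, time to execute tasks) are easy to measure roughly before reaching for a dedicated load-testing tool. The sketch below is a simplified, single-threaded illustration using only the standard library; the helper names are my own, and the measured task is a placeholder for a real call into the application.

```python
import math
import time
from typing import Callable, List


def measure(task: Callable[[], object], runs: int) -> List[float]:
    """Run `task` `runs` times and return each run's duration in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        task()
        durations.append(time.perf_counter() - start)
    return durations


def percentile(durations: List[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not durations:
        raise ValueError("need at least one sample")
    ordered = sorted(durations)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def throughput(durations: List[float]) -> float:
    """Completed tasks per second when the runs execute one after another."""
    total = sum(durations)
    if total <= 0:
        raise ValueError("total duration must be positive")
    return len(durations) / total


# Placeholder task; in practice this would be a request to the system under test.
samples = measure(lambda: sum(range(10_000)), runs=50)
print(f"p95 response time: {percentile(samples, 95) * 1000:.3f} ms")
print(f"throughput: {throughput(samples):.0f} tasks/s")
```

Reporting a high percentile rather than the average keeps occasional slow responses visible, which is usually what users notice.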

3. DEVELOPMENT / TESTING PHASE

I purposely bind development and testing together, as it is not a linear process (at least it is not meant to be in a truly agile world).

- Isolate the apps so they can be tested independently (i.e., use mocking to test front-end functionality/UI, …)
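As a rough illustration of isolating one app with mocking, the sketch below uses Python's standard `unittest.mock.Mock` to stand in for a back-end client. The `greeting_for` helper and the `get_user` method are hypothetical names chosen for the example, not part of any real API.

```python
from unittest.mock import Mock


def greeting_for(user_id: int, api_client) -> str:
    """Hypothetical front-end helper that formats data fetched from a back-end."""
    user = api_client.get_user(user_id)
    return f"Welcome back, {user['name']}!"


def test_greeting_uses_name_from_backend():
    # The mock stands in for the real back-end, so no network or server is needed.
    api_client = Mock()
    api_client.get_user.return_value = {"id": 7, "name": "Ada"}

    assert greeting_for(7, api_client) == "Welcome back, Ada!"
    # Also verify the front-end asked the back-end for the right user, exactly once.
    api_client.get_user.assert_called_once_with(7)
```

Because the back-end is replaced, a failure here points at the front-end logic alone, which is the whole point of testing the apps independently.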

Here are some key principles in development:

- Design with the user in mind

- Understand the existing ecosystem and infrastructure

- Design for scale

- Build for sustainability

- Be data driven

- Use open standards

- Reuse and improve (recycle!)

- Address privacy and security issues

- Be collaborative

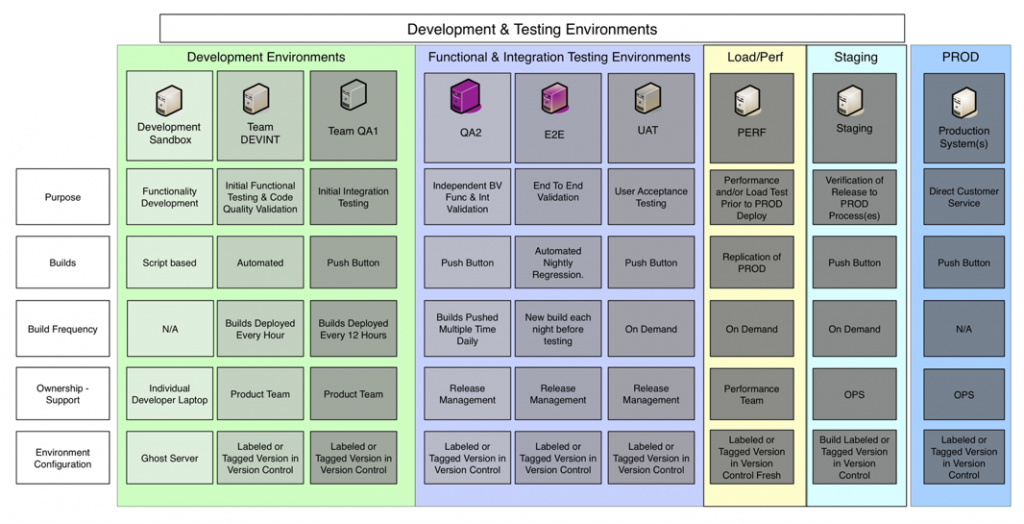

About Environments…

Here is an image of a fully loaded ensemble of environments. I generally see the following 5 environments:

- Local Dev

  - Coding

  - Unit tests

- Dev

  - Merged code

  - Unit/integration/API tests

- QA or Test

  - Code deployed

  - Mainly used to test feature functionality

  - Control of content for automation (critical)

  - Exploratory, E2E, and integration/API testing

  - Other apps not being tested usually point to Staging or Prod systems

  - Feature branch testing can be done here

  - Sign-on process: SAML/SSO (single sign-on) or similar can easily be disabled if the tests do not require it.

    - see: https://www.ubisecure.com/uncategorized/difference-between-saml-and-oauth/ for more info.

- Staging

  - Mainly used to test the deployment process and tasks

  - Identical to Prod across the whole ecosystem (load balancer, disk space, RAM, permissions, etc.)

  - Automation testing with Prod content, though some content can be QA-specified

  - Used for load/performance tests

  - All systems are tested together; UAT is done here

  - Features can be pre-approved here

  - Bugs found here are usually environmental rather than functional

- Production
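The differences between QA and Staging described above (sign-on disabled in QA, QA-controlled content versus Prod content) tend to end up in per-environment settings that the automated tests read. The following is a hypothetical sketch of such settings; every name, URL, and value is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentConfig:
    """Hypothetical per-environment settings consumed by an automated test suite."""
    name: str
    base_url: str
    sso_enabled: bool
    content_source: str  # "fixtures" = QA-controlled content, "prod-copy" = Prod content


ENVIRONMENTS = {
    "qa": EnvironmentConfig(
        "qa", "https://qa.example.test", sso_enabled=False, content_source="fixtures"
    ),
    "staging": EnvironmentConfig(
        "staging", "https://staging.example.test", sso_enabled=True, content_source="prod-copy"
    ),
}


def config_for(env_name: str) -> EnvironmentConfig:
    """Look up an environment by name, failing loudly on typos."""
    try:
        return ENVIRONMENTS[env_name]
    except KeyError:
        raise ValueError(f"unknown environment: {env_name!r}") from None


print(config_for("qa"))
```

Keeping these differences in one place makes it obvious why a test that passes in QA might behave differently in Staging.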

Difference between Manual and Automated Testing

| Manual Testing | Automated Testing |
|---|---|
| Human error can reduce accuracy, making this method less reliable | Testing performed with tools and scripts makes this method more reliable |
| Manual testing requires a dedicated team of individuals, which makes the process time-consuming | It is relatively faster, as the testing is done using software tools, which makes it quicker than a manual process |
| You need to invest in hiring the right members for your team | You just need to invest in acquiring testing tools |
| Better suited to a project where test cases are run once or twice and frequent repetition is not needed | A practical choice when the test cases need to run regularly over a significant amount of time |
| Human observation can help evaluate user-friendliness and achieve a better experience for the end user | User-friendliness and end-user experience cannot be assessed, due to the lack of human observation |

Testing types

| Manual Testing | Automated Testing |
|---|---|
| Exploratory Testing: This scenario requires a tester’s expertise, creativity, knowledge, and analytical and logical reasoning skills. With poorly written specifications and short execution time, human skills are a must to test in this scenario | Regression Testing: The automated method is better here because the code changes frequently and the regressions can be run in a timely manner |
| Ad-Hoc Testing: An unplanned method of testing where the biggest difference-maker is the tester’s insight, which can work without a specific approach | Repeated Execution: When you need to execute a use case repeatedly, automated testing is the better option |
| Usability Testing: Here you need to check the level of user-friendliness and check the software for convenience. Human observation is a must to make the end user’s experience convenient | Performance: You need an automated method when thousands of concurrent users are simulated. It is also the better solution for load testing |

Agile Testing quadrants

Most projects would start with Q2 tests, because those are where you get the examples that turn into specifications and tests that drive coding, along with prototypes and the like.

Technology Facing & Supporting the Team (Q1)

- Are we building the product right?

- Automated (unit, component, integration tests)

Business Facing & Supporting the Team (Q2)

- Are we building the right product?

- Automated & Manual (examples, functional, prototypes, story tests, simulations)

Business Facing & Critiquing the Product (Q3)

- Are we building the right product?

- Manual (Exploratory, Scenarios, usability testing, UAT, Alpha/Beta)

Technology Facing & Critiquing the Product (Q4)

- Are we building the product right?

- Tools (performance, security, “ility” testing)

Important factors to keep in mind for successful QA in your organization

1- Plan the testing process:

Create a test plan specific to a level of testing (e.g. system testing, unit testing, etc.).

Test-level plans should emphasise how the testing schema and the project test plan apply to each level of testing, and state any exceptions.

A test plan must contain the scope of the testing and all the assumptions that are made.

Completion criteria need to be specified to determine when the specific level of testing is complete.
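Completion criteria are easiest to enforce when they are written down as a check the team can run. Below is a minimal sketch of what that could look like; the two criteria (a minimum pass rate and no open critical defects) and their default thresholds are assumptions for illustration, and each team would substitute its own.

```python
from dataclasses import dataclass


@dataclass
class LevelResult:
    """Hypothetical summary of one testing level (e.g. system testing)."""
    executed: int
    passed: int
    open_critical_defects: int


def is_level_complete(
    result: LevelResult,
    min_pass_rate: float = 0.95,   # assumed threshold, adjust per project
    max_open_critical: int = 0,    # assumed threshold, adjust per project
) -> bool:
    """Return True when the level meets every completion criterion."""
    if result.executed == 0:
        return False  # nothing ran, so the level cannot be called complete
    pass_rate = result.passed / result.executed
    return (
        pass_rate >= min_pass_rate
        and result.open_critical_defects <= max_open_critical
    )


print(is_level_complete(LevelResult(executed=200, passed=196, open_critical_defects=0)))
```

The value lies less in the code than in forcing the thresholds to be agreed on before testing at that level starts.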

2- Involve QA from the beginning:

QA Engineers should be involved in the project from the initial stage of the Software Development Life Cycle (SDLC), i.e., requirement gathering. This helps identify the possible bug-prone areas and design the software so that these areas are properly and efficiently managed.

Involving the tester at a later stage may hurt the quality of the software: the design may not allow the changes required to remove the bugs, forcing you to compromise in certain areas.

3- Document, Document, Document:

Documentation is a major step of the testing process. Documentation always helps the testers to get into the project detail in-depth.

To understand the project, documenting the project requirements is a very useful exercise.

Most companies do not take documentation very seriously at the early stages of the project which results in critical problems in the later phase.

Especially if a new tester joins an ongoing project, proper documentation of that project will help them get on top of it very easily.

4- Acquire domain knowledge:

QA is the user’s proxy in the development organization. For a QA person it is critical to:

Acquire good domain knowledge, grasping as many concepts as possible, as quickly as possible.

Review online resources about the domain on which the application under test is based.

If possible, get training on the domain or meet the domain experts for advice.

5- Communicate to bridge the Developer-QA gap:

Developers and QA come from different planets: developers are happy if software works once, QA is happy if it breaks once. So a communication gap is to be expected. However, this gap results in a lack of in-depth knowledge of the application under test, which in turn lets defects go unidentified.

Communication between Development and QA affects the overall productivity of the project.

More knowledge, more transparency of an ongoing project always helps the testing process.

Regular meetings can be beneficial to track the status of the project.

To gain in-depth knowledge of a project, there should be no communication gap between the testers and the business team either.

6- Organizations should arrange formal domain training sessions for the QA team, covering both the business and technical perspectives.

Important reads:

- https://agiletester.ca/blog/

- https://lisacrispin.com/

- https://janetgregory.ca/

- https://www.mabl.com/

- https://mavericktester.com/

References (excerpts from):

- https://lisacrispin.com/2011/11/08/using-the-agile-testing-quadrants/

- https://usersnap.com/blog/agile-testing/

- https://www.mabl.com/blog/changing-roles-of-testers-in-agile-and-devops

- https://www.leadingagile.com/2018/09/escaped-defects/

- https://www.grazitti.com/blog/manual-vs-automated-quality-assurance-testing-which-one-is-a-better-fit-for-your-testing-process/

- https://www.grazitti.com/blog/web-application-testing-issues-you-cant-afford-to-miss/

- https://www.grazitti.com/blog/qa-checklist-for-responsive-pages/

- https://xbosoft.com/blog/agile-testing-principle-habit-5-think-tasks/